There's an image problem with mobile app security. While it's critical for highly regulated industries like financial services, it is often overlooked in others. This usually comes down to development priorities, which typically fall into three categories: user experience, app performance, and app security. When dealing with finite resources such as time, shifting priorities, and team skill sets, engineering teams often have to prioritize one over the others. Usually, security is the odd man out ...

IT outages, caused by poor-quality software updates, are no longer rare incidents but rather frequent occurrences, directly impacting over half of US consumers. According to the 2024 Software Failure Sentiment Report from Harness, many now equate these failures to critical public health crises ...

In just a few months, Google will again head to Washington DC and meet with the government for a two-week remedy trial to cement the fate of what happens to Chrome and its search business in the face of ongoing antitrust court case(s). Or, Google may proactively decide to make changes, putting the power in its hands to outline a suitable remedy. Regardless of the outcome, one thing is sure: there will be far more implications for AI than just a shift in Google's Search business ...

In today's fast-paced digital world, Application Performance Monitoring (APM) is crucial for maintaining the health of an organization's digital ecosystem. However, the complexities of modern IT environments, including distributed architectures, hybrid clouds, and dynamic workloads, present significant challenges ... This blog explores the challenges of implementing application performance monitoring (APM) and offers strategies for overcoming them ...

Service disruptions remain a critical concern for IT and business executives, with 88% of respondents saying they believe another major incident will occur in the next 12 months, according to a study from PagerDuty ...

IT infrastructure (on-premises, cloud, or hybrid) is becoming larger and more complex. IT management tools need data to drive better decision making and more process automation to complement manual intervention by IT staff. That is why smart organizations invest in the systems and strategies needed to make their IT infrastructure more resilient in the event of disruption, and why many are turning to application performance monitoring (APM) in conjunction with high availability (HA) clusters ...

In today's data-driven world, the management of databases has become increasingly complex and critical. The following are findings from Redgate's 2025 The State of the Database Landscape report ...

With the 2027 deadline for SAP S/4HANA migrations fast approaching, organizations are accelerating their transition plans ... For organizations that intend to remain on SAP ECC in the near-term, the focus has shifted to improving operational efficiencies and meeting demands for faster cycle times ...

As applications expand and systems intertwine, performance bottlenecks, quality lapses, and disjointed pipelines threaten progress. To stay ahead, leading organizations are turning to three foundational strategies: developer-first observability, API platform adoption, and sustainable test growth ...

It never ceases to amaze me when I examine the curricula of specialist courses that there are either no prerequisites, or very minor ones. I feel that that the analogy above makes the case for having general IT knowledge, even for someone who wishes to specialize in an area of IT, such as Cybersecurity or Cloud computing ...

The surge in AI adoption amplifies the need for robust data center infrastructure to handle the terabytes of data being generated daily ... Still, as much as AI will benefit from data centers, data centers need observability solutions to ensure resiliency and sustainability so businesses can operate to their full potential and provide seamless experiences to customers ...

Today's IT environments are more complex than ever, with organizations managing an increasing number of applications, platforms, and systems. To maintain peak performance and ensure seamless digital experiences, businesses are turning to Artificial Intelligence for IT Operations (AIOps) ...

Observability has become a critical component of managing modern, complex systems, helping organizations ensure uptime, optimize performance, and quickly diagnose issues ... But the tide is shifting. With open-source projects stepping in to fill key parts of the observability stack, the market is on the brink of a major disruption ...

A reliable online shopping experience is becoming increasingly important to consumers, especially at checkout ... 26% of respondents said they would abandon an online purchase if they encountered a bug at any point during the experience ...

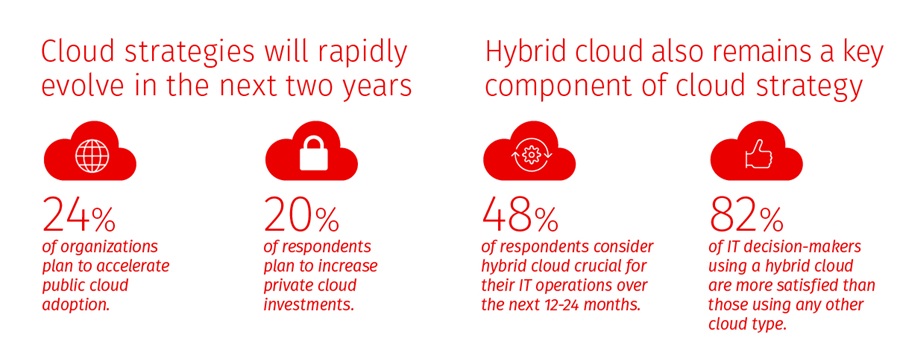

Organizations continue to shift away from a single cloud approach toward more flexible hybrid cloud environments, according to the 2025 State of Cloud Report, conducted by Rackspace Technology ...