An effective breakpoint strategy helps deliver sharp, properly sized images, which are some of the most compelling pieces of content on a web page. Lack of such a strategy can lead to jagged images or ones that take too long to render due to excessive size, potentially reducing the overall effectiveness of web pages — and driving down the quality of the user experience.

Creation of derivative images at properly determined breakpoints is critical but often challenging for web developers and designers to achieve. Generating the right number of variants — and at the correct widths and spacings — for their users' critical devices can provide a great balance between byte savings and cache dilution.

While it's true that having too few breakpoints can improve offload, it can also deliver many unused bytes and place an unnecessarily high rendering burden on the device and browser. Too many breakpoints have the opposite effect: fewer unused bytes, but more variants competing for cache space and therefore lower offload. Finding the right balance requires some time and resources, but the benefits are worth the exercise.
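To make the trade-off concrete, here is a minimal sketch that compares a sparse and a dense set of breakpoints. The breakpoint widths, the display widths, and the helper names `pick_variant` and `average_extra_width` are all hypothetical and chosen only for illustration. The sketch assumes the server delivers the smallest variant that is at least as wide as the slot the image is displayed in.

```python
from bisect import bisect_left


def pick_variant(breakpoints, display_width):
    """Return the smallest breakpoint width that covers display_width."""
    widths = sorted(breakpoints)
    i = bisect_left(widths, display_width)
    # Fall back to the largest variant if nothing is wide enough.
    return widths[min(i, len(widths) - 1)]


def average_extra_width(breakpoints, display_widths):
    """Mean surplus width (in px) delivered per request."""
    extras = [max(pick_variant(breakpoints, w) - w, 0) for w in display_widths]
    return sum(extras) / len(extras)


# Hypothetical display widths requested by users' devices.
display_widths = [320, 360, 414, 768, 1024, 1280]

few = [640, 1280]                          # 2 variants to cache
many = [320, 480, 640, 800, 1024, 1280]    # 6 variants to cache

for name, bps in (("few", few), ("many", many)):
    avg = average_extra_width(bps, display_widths)
    print(f"{name}: {len(bps)} variants, {avg:.1f} px surplus width on average")
```

With these made-up numbers, the sparse set keeps the cache small but delivers far more surplus width per request, while the dense set does the reverse. Real decisions would weight each display width by its share of actual traffic.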

In this 2-part blog, we will explore just how significant image breakpoints are to businesses, along with some important device-related factors to consider in image breakpoint decisions, including screen resolution market share, user base, device characteristics, and pixel density, so that you can deliver an optimally sized web image every time.

The Byte Loss Breakdown

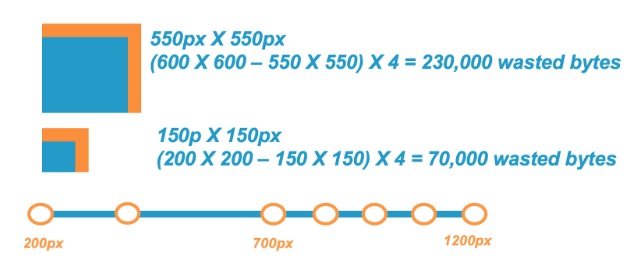

Getting image breakpoints right is especially important at larger image widths. To illustrate, consider a web page displaying two images, each of which the browser must scale down by 50 pixels in both height and width.

As you can see, delivering a 200 X 200 pixel (px) image for a 150 X 150 px use case (50 extra pixels in width and height) costs the browser 70,000 more bytes of memory to display the image than if the image were delivered at 150 X 150 px.

In the larger example, delivering a 600 X 600 px image for a 550 X 550 px use case (still 50 extra pixels in height and width) wastes 230,000 bytes, roughly 3.3 times as many. This illustrates why it's recommended to have more breakpoints at larger image sizes (in this case, images larger than 700 px in width).
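The figures above can be reproduced with a few lines of arithmetic. This sketch assumes the browser holds each decoded image as 4 bytes per pixel (RGBA, 8 bits per channel). The article does not state that figure, but it is the value that yields the quoted numbers. The function names are illustrative.

```python
BYTES_PER_PIXEL = 4  # assumption: decoded RGBA, 8 bits per channel


def decoded_bytes(width, height, bytes_per_pixel=BYTES_PER_PIXEL):
    """Memory needed to hold a decoded width x height image."""
    return width * height * bytes_per_pixel


def wasted_bytes(delivered, displayed, bytes_per_pixel=BYTES_PER_PIXEL):
    """Extra decoded bytes when `delivered` (w, h) is shown at `displayed` (w, h)."""
    return (decoded_bytes(*delivered, bytes_per_pixel)
            - decoded_bytes(*displayed, bytes_per_pixel))


small = wasted_bytes((200, 200), (150, 150))
large = wasted_bytes((600, 600), (550, 550))

print(small)                     # 70000
print(large)                     # 230000
print(round(large / small, 1))   # 3.3
```

The same 50 px surplus costs more at larger sizes because the waste grows with the image's area, not with its width alone.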

Another example to consider involves displaying images on a typical smartphone, in this case the Samsung Galaxy S5, which has a screen resolution of 1080 X 1920 px. Suppose a server sends a 1200 X 2000 px image that must be resized to 1080 X 1920 px. This would result in 1,305,600 bytes (about 1.3 MB) of waste, not to mention a significant degradation in user experience.
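As a quick check of the smartphone example, the sketch below recomputes the waste and also expresses it as a share of the delivered pixels. As before, 4 bytes per decoded pixel is an assumption that matches the quoted figure rather than something the article states, and the variable names are illustrative.

```python
BYTES_PER_PIXEL = 4  # assumption: decoded RGBA, 8 bits per channel

delivered_w, delivered_h = 1200, 2000   # image sent by the server
screen_w, screen_h = 1080, 1920         # Galaxy S5 screen resolution

delivered_px = delivered_w * delivered_h
displayed_px = screen_w * screen_h
surplus_px = delivered_px - displayed_px

wasted = surplus_px * BYTES_PER_PIXEL
surplus_share = surplus_px / delivered_px

print(wasted)                          # 1305600
print(f"{wasted / 1_000_000:.1f} MB")  # 1.3 MB
print(f"{surplus_share:.1%}")          # 13.6%
```

In other words, under this assumption roughly one in seven delivered pixels is discarded when the image is scaled down to fit the screen.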

Read Image Optimization Best Practices for Device Breakpoints - Part 2, covering 4 tips for getting image breakpoints right.
