If you watch enough Western films (cowboys etc.), you will have come across a mountain man, henceforth called "M." This rugged person lived in the mountainous areas of the USA and made his living by obtaining animal pelts to sell to the fashionable ladies in the East.

M was the master of his territory. He knew enough about the flora, fauna and geology of the land to know what to eat and what not to eat, how to steer clear of the wild animals and how to trap them, and how to avoid falling into holes or off cliffs. He also knew the weather patterns very well.

Botanists, naturalists and geologists also visited these mountain areas to study them. Their knowledge of their own subjects far exceeded M's, but they did not know the territory's geography or the vagaries of its weather as well as he did.

Over time, on their separate expeditions, the flora expert fell off a cliff, the geologist was poisoned by a plant and the fauna expert died of cold. M found each of them and gave them a decent burial, with the usual two wooden sticks tied in the shape of a cross on each grave, and said a few words from the Bible over each one.

So, what has this to do with IT, I hear you ask? Everything, is the answer. The flora, fauna and geology experts represent specialists without a general underpinning knowledge of the IT "territory." The vagaries of the weather and the abundance of animals and plants represent the jobs in IT. The jobs "mutate" as the weather changes, and other perils lurk in areas of knowledge outside a specialist's own.

The visitors would have been wise to ask M to accompany them, or to study the territory and its "contents" well before embarking on their ultimately fatal expeditions. M was amazed to see these unprepared "dudes" on his territory, and it never ceases to amaze me, when I examine the curricula of specialist IT courses, that they have either no prerequisites or only very minor ones. The IT equivalent of these deaths is the often-quoted 70% failure rate of IT projects.

Cybersecurity

I feel that the analogy above makes the case for having general IT knowledge, even for someone who wishes to specialize in one area of IT, such as cybersecurity or cloud computing.

I have seen an advertisement for a cybersecurity course along these lines: "Become a cybersecurity expert in 16 hours with our course; $99, was $299," followed by the story of an accountant who took it and became an expert. This is La La Land, and it may help explain why the "bad guys" seem to have the upper hand.

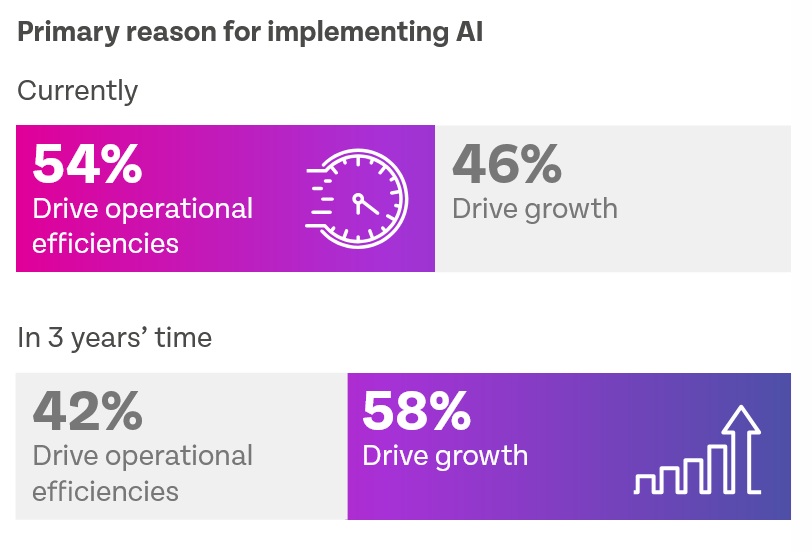

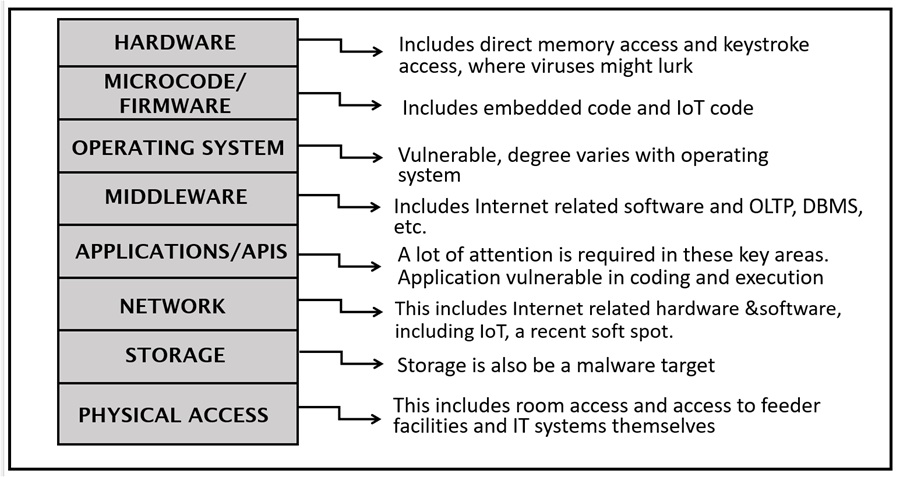

Figure 1: Cybersecurity: All These Areas are Vulnerable

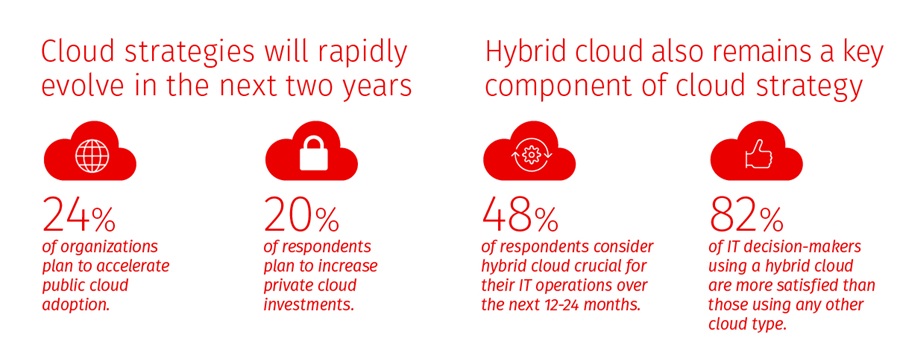

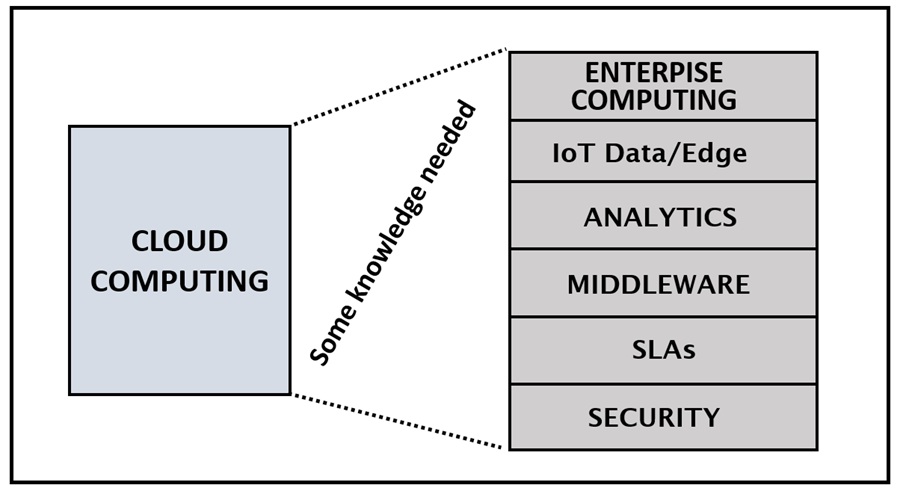

Cloud Computing

Cloud computing is data center computing on steroids. Working in a traditional data center draws people into the general work and knowledge surrounding that environment, giving them a broad understanding of it; the cloud demands the same breadth, and more. It is advantageous to learn about that environment before entering it, is it not?

Figure 2: The Cloud Computing Ecosphere Scope

It should be self-evident that, whatever one's role in this environment, a broad knowledge of its composite nature is necessary to succeed.

Application Development

School computer education, and to some extent university education, suggests that computing is about coding (in Python) and "computational thinking." What one is supposed to be thinking about is not made clear.

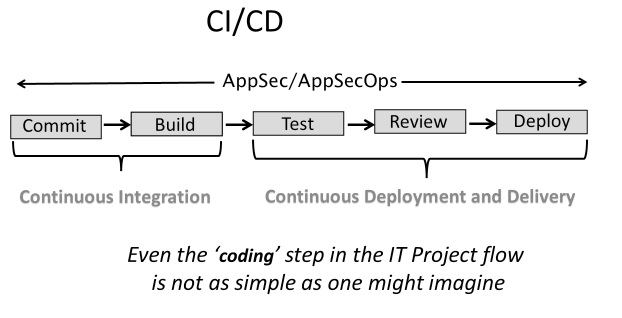

The application development environment comprises (among other things):

- Coding in one or more languages

- Security aspects of applications

- The whole process of design/code/test/recode, supported by CI/CD (continuous integration/continuous delivery or deployment)

- Methodologies: Agile, Scrum, DevOps, DevSecOps and others

- Project management, milestones, reviews and other controls

Incidentally, "test" in the diagram above is not a single activity. It includes unit tests, integration tests and functional tests, and, depending on the work in hand, there may be other kinds of test as well, up to 16 in fact.

In short, development is much, much more than coding, which may come as a surprise to many people and organizations. Remember also that "development" is only part of the IT application ecosphere.

Summary

Long experience in IT, both at the coal face (in the trenches) and in researching and writing about it, leads me to the conclusion that there is a need for a form of general IT education beyond anything on offer today. What is on offer comprises mainly computer science (CS), "IT Fundamentals" courses, specialisms and "boot camps."

None of these covers the terrain of modern workplace IT, which has always evolved and which today is undergoing a tectonic shift driven by AI (artificial intelligence) and its derivatives. One will look in vain for coverage of high-performance and mainframe computing, graphics, IoT, edge computing and the key methodologies that make IT projects tick.

It is time for a change.